Don’t Reason Alone! Structured Reasoning via Multi-Agent Systems

Opinions based on our latest reasoning survey [1] and MAS-Zero [2]

Recent progress in large language models (LLMs) has renewed attention on agentic systems, where models go beyond token-level generation to exhibit interactive and autonomous behavior. This blog reflects on a growing trend: the shift from standalone LLMs to structured reasoning systems, both single-agent and multi-agent, that integrate planning, tool use, coordination, and more. While prompting techniques such as “think step-by-step” reveal that LLMs can reason, the models often lack the ability to structure their thoughts effectively. As tasks become more complex, we argue that step-by-step reasoning alone is insufficient; what is needed is structured reasoning. While current “long-CoT” methods may help, multi-agent systems (MAS) potentially offer another approach to achieving higher-quality structured reasoning. We anticipate not only a trend from standalone LLMs to MAS, which leverage sophisticated collaboration among specialized agents, but also a reverse trend of “distilling” MAS back into standalone LLMs, where MAS provide high-quality reasoning data for training and inference in standalone LLMs.

Observed Trend and What is Happening?

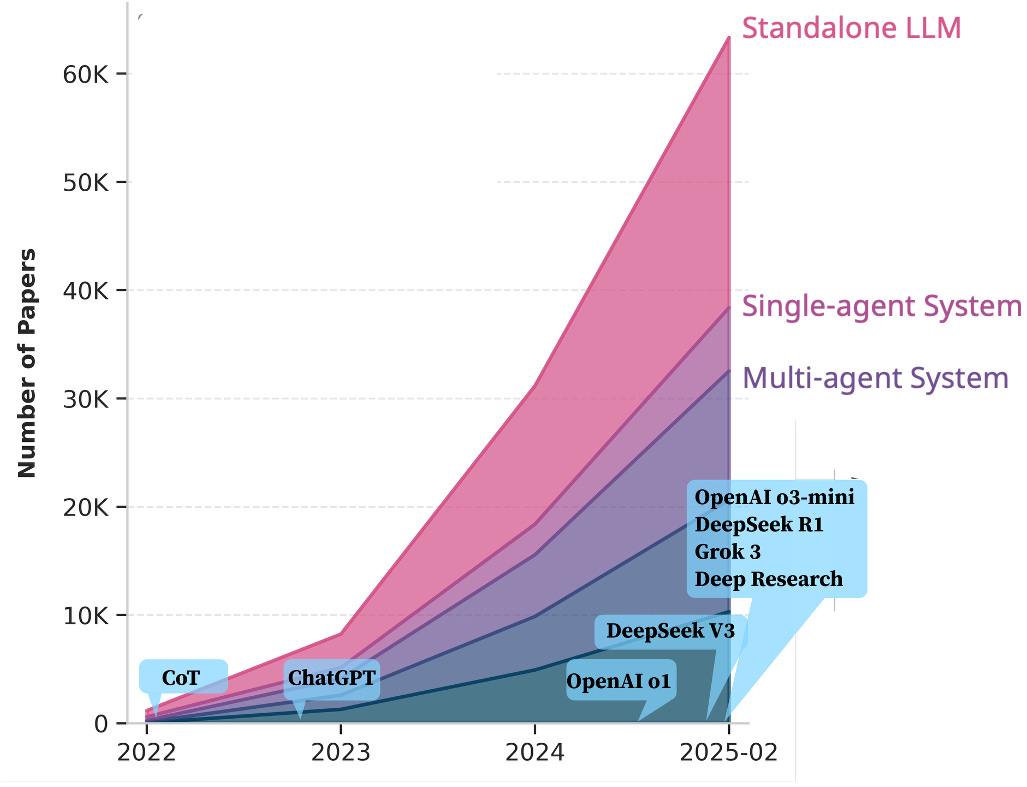

Almost one year ago, we were arguing that AI agents need to continuously update themselves in an autonomous fashion [3,4,5]. At the time, these seemed like distant goals. The path forward was unclear—we were still debating what an agent even is, and what it means for a model to “think,” let alone coordinate multiple agents. Now, a year later, we are beginning to see progress. Figure 1 shows a trendline from 2022 to early 2025. Interest in agentic systems—both single-agent and multi-agent—is not only rising, but accelerating.

So, what is happening?

Let us begin with the basics. What exactly is an agent? In [6], agenticness is defined as a spectrum, and [1] extends this view by identifying two core capacities: interactiveness and autonomy.

Interactiveness refers to an agent’s ability to engage with the external world, including environments or other agents. This capability is crucial because LLMs, while powerful, often have limited knowledge and reasoning abilities confined to their internal memory.

Autonomy, on the other hand, refers to an agent’s ability not only to follow human instructions but also to independently initiate and execute actions.

With these in mind, what does the spectrum look like in practice?

Figure 2 provides a visualization of this spectrum. If we examine some representative points along it, we see:

Single-agent Systems: These involve interaction between one agent and its environment. The complexity of these interactions can vary significantly, from simple tool use to dynamic task execution with feedback loops.

Multi-agent Systems: These introduce an additional agent-agent interaction loop, where multiple agents communicate, coordinate, and influence each other’s behavior. In such systems, agents assume different roles, exchange messages, and collaboratively coordinate their actions while operating within a shared environment.

Remarks: These categories are hierarchical, not exclusive. All of them produce token-level outputs, but increasingly we observe high-level planning and structural patterns behind those tokens. These structures are something we have long known to be important, but only now are we beginning to see them emerge in practice.
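To make the agent-environment and agent-agent loops concrete, here is a minimal Python sketch, assuming each agent is just a role name plus a policy that maps a shared transcript to its next message. The `solver` and `critic` policies are hypothetical stubs standing in for LLM calls (the solver's first answer is deliberately wrong to simulate a reasoning slip), and `run_mas` is an illustrative round-robin scheduler, not the API of any particular framework.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    sender: str
    content: str


# A policy maps the shared transcript to the agent's next message.
# In a real system this would be an LLM call; here it is a stub.
Policy = Callable[[List[Message]], str]


@dataclass
class Agent:
    role: str
    policy: Policy

    def act(self, transcript: List[Message]) -> Message:
        return Message(self.role, self.policy(transcript))


def run_mas(agents: List[Agent], task: str, rounds: int) -> List[Message]:
    """Round-robin agent-agent loop over a shared transcript (the shared environment)."""
    transcript = [Message("user", task)]
    for _ in range(rounds):
        for agent in agents:
            transcript.append(agent.act(transcript))
    return transcript


def solver(transcript: List[Message]) -> str:
    # Hypothetical stub: the first attempt contains an arithmetic slip;
    # once the critic has rejected an answer, the solver revises it.
    rejected = any(m.sender == "critic" and m.content.startswith("reject") for m in transcript)
    return "408" if rejected else "398"


def critic(transcript: List[Message]) -> str:
    # Hypothetical stub: checks the latest solver answer for the fixed task 17 * 24.
    answer = int(transcript[-1].content)
    return "accept" if answer == 17 * 24 else "reject: recheck the multiplication"


if __name__ == "__main__":
    agents = [Agent("solver", solver), Agent("critic", critic)]
    for m in run_mas(agents, "What is 17 * 24?", rounds=2):
        print(f"{m.sender}: {m.content}")
```

Passing a single agent to `run_mas` reduces it to the single-agent case, which mirrors the remark that these categories are hierarchical rather than exclusive.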

Now, Why Is This Trend Emerging?

One common critique of autoregressive LLMs has been: is predicting the next token enough to model the world?

One intuitive idea is to let LLMs think longer, consuming more tokens before arriving at a final answer. This allows them to handle more complex tasks, explore intermediate steps, and reduce errors from committing to an answer too early.

This “thinking” ability can be easily evoked, even in a standalone LLM. The simplest, and still popular, way is to add “think step-by-step” to the prompt.
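A minimal sketch of this prompting pattern, assuming a plain-text `Q:`/`A:` template and a completion that states its result as “the answer is N” for a numeric N (both are illustrative conventions, not a fixed standard). Because the model may revise itself partway through the chain, the helper takes the last stated answer rather than the first.

```python
import re
from typing import Optional

# Trigger phrase appended to elicit step-by-step reasoning.
COT_TRIGGER = "Let's think step by step."

# Assumed output convention: "... the answer is <number>".
ANSWER_RE = re.compile(r"answer is\s*(-?\d+(?:\.\d+)?)", re.IGNORECASE)


def build_cot_prompt(question: str) -> str:
    # Hypothetical template: the trigger opens the answer, so the model
    # starts by reasoning instead of answering immediately.
    return f"Q: {question}\nA: {COT_TRIGGER}"


def extract_final_answer(completion: str) -> Optional[str]:
    # Keep the last stated answer, since later steps may correct earlier ones.
    matches = ANSWER_RE.findall(completion)
    return matches[-1] if matches else None


if __name__ == "__main__":
    print(build_cot_prompt("What is 17 * 24?"))
    print(extract_final_answer("The answer is 398. Rechecking: the answer is 408."))
```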

However, relying solely on internal weights has many drawbacks (for example, missing or outdated knowledge), so we also want the model to draw on memory, tools, and external feedback. Single-agent systems are designed to enable this interaction with the external environment. As a result, reasoning chains usually become longer and more complex.
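The single-agent loop described here can be sketched as follows, assuming a hypothetical tool registry (`TOOLS`) and a scripted policy standing in for the LLM. At each step the agent either calls a tool or emits a final answer; every observation is written to memory, so the next decision can use external feedback rather than internal weights alone.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool registry; a real agent might wrap search,
# a code interpreter, or external APIs here.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split(","))),
}

# An action is (tool name, argument); the name "final" ends the episode.
Action = Tuple[str, str]
Policy = Callable[[str, List[str]], Action]


def scripted_policy(task: str, memory: List[str]) -> Action:
    # Stand-in for an LLM choosing its next step from the task and memory.
    if not memory:
        return ("add", "12,30")
    # Answer with the result of the most recent observation.
    return ("final", memory[-1].split("->")[-1].strip())


def run_agent(task: str, policy: Policy, max_steps: int = 5) -> Tuple[Optional[str], List[str]]:
    memory: List[str] = []
    for _ in range(max_steps):
        tool, arg = policy(task, memory)
        if tool == "final":
            return arg, memory
        observation = TOOLS[tool](arg)                    # act on the environment
        memory.append(f"{tool}({arg}) -> {observation}")  # store external feedback
    return None, memory  # step budget exhausted without a final answer


if __name__ == "__main__":
    print(run_agent("What is 12 + 30?", scripted_policy))
```

The `max_steps` budget is a simple guard against an agent that never commits to an answer.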

As task complexity increases further and agents consume thousands of tokens to “think,” it becomes essential to manage and coordinate that process. Simply stacking longer chains of thought (CoT) is no longer sufficient. Multi-agent systems address this by deploying multiple agents with different roles to handle different parts of the task. Although MAS are still at an early stage, we can offer some reasons why they are attracting increasing interest as standalone LLM and single-agent reasoning reaches its limits.

It could work! Many teams (including ours, with MAS-Zero [2]) are getting good results with MAS, and there’s nothing like results!

Context management. Every LLM or agent has a maximum context length, and that limit is reached even faster when many tool calls are involved. Even though some LLMs today can accept very long input contexts (for instance, Gemini 1.5 Pro accepts 2M tokens), their ability to truly understand long, complex inputs is mixed. Splitting a task across agents lets each one work within a shorter, more focused context.

Generalization across tasks and environments. Multi-agent systems offer a flexible framework for solving complex problems. Defining specialized roles and interactions for multiple agents introduces useful structure beyond step-by-step generation, and can potentially support generalization across both tasks and domains.
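A back-of-the-envelope sketch of the context-management argument, using whitespace word counts as a crude token proxy and simple truncation as a hypothetical stand-in for a subagent's summary. Only the shape of the arithmetic matters: a single agent's context grows with every raw tool output, while an orchestrator reads only short reports and each subagent holds just one subtask.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Crude proxy: whitespace-separated words. Real tokenizers count differently.
    return len(text.split())


def summarize(report: str, max_tokens: int = 5) -> str:
    # Hypothetical stand-in for a subagent condensing its own work
    # (here: plain truncation) before reporting back.
    return " ".join(report.split()[:max_tokens])


def single_agent_context(task: str, outputs: List[str]) -> int:
    # One agent accumulates every raw tool output in a single context.
    return count_tokens(task) + sum(count_tokens(o) for o in outputs)


def orchestrator_context(task: str, outputs: List[str]) -> int:
    # Subagents each process one output in their own context;
    # the orchestrator only reads their short summaries.
    return count_tokens(task) + sum(count_tokens(summarize(o)) for o in outputs)


def largest_subagent_context(subtask: str, outputs: List[str]) -> int:
    # The biggest context any single subagent has to hold.
    return max(count_tokens(subtask) + count_tokens(o) for o in outputs)


if __name__ == "__main__":
    outputs = ["word " * 100] * 3  # three 100-token tool results
    print(single_agent_context("Compare three papers", outputs))      # 303
    print(orchestrator_context("Compare three papers", outputs))      # 18
    print(largest_subagent_context("Summarize one paper", outputs))   # 103
```

The totals say nothing about answer quality; they only show why splitting the work can keep each agent far from its context limit.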

What Can We Learn from The Trend?

There are many lessons one can learn from this trend. For example, reasoning is no longer just about generating more tokens; it is about structuring those tokens into coherent, adaptive processes. One important realization is that step-by-step reasoning is simply not enough. This may go unnoticed when tasks are relatively simple, but we are now reaching a point where existing tasks are quickly saturated and new ones are becoming more complex.

This has made us realize that scaling model size or lengthening responses alone does not guarantee better performance; instead, we are observing a shift from token-level generation to structured, process-aware reasoning. Even though prompting with “think step by step” can improve short-term reasoning, it does not provide mechanisms for managing uncertainty, revising plans, or coordinating multiple subgoals. As tasks grow more complex, agents need not only to think, but also to structure their thinking: deciding what to focus on, when to delegate, how to verify outcomes, and how to adjust when plans go wrong.

Thinking is natural for human beings, whether we think out loud or implicitly. However, without practice, people may fail to structure their own thoughts. Consider the example in Figure 3. The difference is not in the ability to think, but in the structure of the thinking. Without practice, even natural thinking can become messy and unproductive.

From Step-by-step Reasoning to Structured Reasoning

To pursue such structured reasoning, there are multiple aspects we want to consider.¹ Figure 4 shows a potential roadmap toward increasingly autonomous and structured thinking.

High-level Overview. At a high level, humans define what the task is. This includes not only the input prompt (e.g., a question) but also the setup: which LLMs or tools the machine might use, what initial components are available (e.g., a knowledge base, external APIs), and what reasoning workflows are allowed or encouraged (e.g., Chain-of-Thought, self-consistency, debate, self-refinement, or others). Given this task definition, the machine needs to figure out how to solve it. Over time, the level of autonomy and control shifts through the following stages:

Standalone LLM and Single-agent Systems. Humans provide the expected outputs so that the model can learn how to achieve the goal. This is done either through supervised learning (following human demonstrations) or reinforcement learning from verified rewards (exploring through interaction).

Manual Multi-agent System. To enable structured reasoning, humans may provide hand-crafted workflows or plans. However, this approach has limitations: human-designed plans may not align with the agent’s internal preferences or architecture, and these workflows are often hard to adapt to new or unseen tasks.

Automatic Multi-agent Systems. The agent autonomously discovers its own preferred planning strategies, without requiring explicit human supervision. This may happen through interaction with the environment or by evaluating and refining its own behavior across tasks.
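The difference between the manual and automatic stages can be illustrated with a toy sketch. Humans hand-craft two candidate workflows (a single direct answer, and self-consistency by majority vote), and an automatic step then picks between them by how often their answers pass a label-free self-check. Everything here is an assumption for illustration: `noisy_model` is a deterministic stub for an LLM, tasks are equations `a * x = b` that can be verified by substitution, and the selection rule is not the method of MAS-Zero [2] or of any specific system, which would generate and refine workflows rather than choose from a fixed list.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

Task = Tuple[int, int]  # solve a * x = b for x


def noisy_model(task: Task, sample: int) -> int:
    # Hypothetical LLM stub: deterministic, but some samples are off by one
    # to mimic reasoning slips.
    a, b = task
    x = b // a
    return x + 1 if (sample + a) % 3 == 0 else x


# Manual MAS: humans hand-craft the candidate workflows.
def direct(task: Task) -> int:
    return noisy_model(task, 0)


def self_consistency(task: Task, k: int = 5) -> int:
    votes = Counter(noisy_model(task, i) for i in range(k))
    return votes.most_common(1)[0][0]


WORKFLOWS: Dict[str, Callable[[Task], int]] = {
    "direct": direct,
    "self_consistency": self_consistency,
}


def self_verify(task: Task, answer: int) -> bool:
    # Label-free check: substitute the answer back into the equation.
    a, b = task
    return a * answer == b


def auto_select(tasks: List[Task]) -> Tuple[str, Dict[str, float]]:
    # Automatic step (toy): score each workflow by its self-verification
    # pass rate, with no ground-truth answers, and keep the best one.
    scores = {
        name: sum(self_verify(t, wf(t)) for t in tasks) / len(tasks)
        for name, wf in WORKFLOWS.items()
    }
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    print(auto_select([(17, 408), (12, 144), (5, 35)]))
```

The self-check is what makes the selection possible without human labels; tasks that cannot be verified this cheaply need a different signal.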

Once agent-generated workflows emerge, they can be used as a new form of structured guidance, supporting not only the MAS itself but also reasoning in standalone LLMs. Conceptually, this introduces an evolving, two-way trend:

Standalone LLM to MAS: from human supervision to automatic MAS. Machines generate high-level plans, i.e., MAS, that require minimal human intervention and reflect their own reasoning preferences. This follows the shift from standalone LLM to single-agent system to multi-agent system, where reasoning becomes increasingly structured. One key challenge here is that there is no unique ground-truth MAS. As a result, training cannot rely on human-designed MAS alone, but must instead smartly leverage the agent’s own experience. Eventually, we may see non-reasoning LLMs gain reasoning capabilities through MAS, and reasoning LLMs improve further with the support of MAS.

MAS to Standalone LLM: MAS as structured guidance for reasoning. The generated plans can be executed directly, but they can also serve as structured guidance to help standalone LLMs reason more effectively. For example, MAS can improve the quality of reasoning by introducing higher levels of abstraction, enabling parallelism, or shortening reasoning chains. Eventually, reasoning data “distilled” from MAS may outperform current step-by-step long-CoT data.
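One way the “distillation” direction could look in practice is sketched below, assuming each MAS run is logged as role-tagged steps plus a final answer and a `verified` flag (all field names are hypothetical). Verified traces are flattened into prompt/completion pairs that a standalone LLM could be fine-tuned on, keeping the role tags so that the structure of the MAS survives in the text.

```python
from typing import Dict, List


def trace_to_example(question: str, steps: List[Dict[str, str]], answer: str) -> Dict[str, str]:
    # Flatten role-tagged MAS steps into one structured completion.
    lines = [f"[{s['role']}] {s['content']}" for s in steps]
    return {"prompt": question, "completion": "\n".join(lines + [f"Answer: {answer}"])}


def build_dataset(traces: List[Dict]) -> List[Dict[str, str]]:
    # Keep only traces whose final answer passed verification, so the
    # distilled data does not teach the model failed reasoning.
    return [
        trace_to_example(t["question"], t["steps"], t["answer"])
        for t in traces
        if t["verified"]
    ]


if __name__ == "__main__":
    traces = [
        {
            "question": "What is 17 * 24?",
            "steps": [
                {"role": "planner", "content": "Split into 17 * 20 + 17 * 4."},
                {"role": "worker", "content": "340 + 68 = 408."},
            ],
            "answer": "408",
            "verified": True,
        },
        {
            "question": "What is 13 * 11?",
            "steps": [{"role": "worker", "content": "13 * 11 = 133."}],
            "answer": "133",
            "verified": False,
        },
    ]
    for example in build_dataset(traces):
        print(example["completion"])
```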

MAS Moments

We might all have encountered this: you give a complex task to a strong LLM, and it produces an incorrect answer. You then try to “help” by, for example, manually writing the plan, decomposing the task, listing required knowledge, or even acting as a manual verifier, by explicitly telling the model that an answer is incorrect and needs to be reconsidered—yet none of these work.

In MAS-Zero [2], we observed something different. The system autonomously chose to collaborate in ways we had not anticipated—and succeeded. It was not following a human-designed workflow. It was not relying on external reward signals. It was a spontaneous, effective, and structured interaction among agents. That was a “MAS moment”—a surprising and genuine emergence of intelligent coordination.

Many of us had a “ChatGPT moment” shortly after its release, when interacting with it significantly exceeded our expectations of what AI could do. Later, we experienced an “AI agentic moment,” when an agent pivoted to using a search tool and completed the task by consulting external resources. I believe “MAS moments”, where the interaction and collaboration among agents surpass our expectations, are next. While some have not yet experienced these moments, I believe they will in the near future (check out our MAS-Zero!). The challenges ahead are significant, but I am confident that these moments will become increasingly common as MAS matures.

References

[1]: A Survey of Frontiers in LLM Reasoning: Inference Scaling, Learning to Reason, and Agentic Systems.

[2]: MAS-Zero: Designing Multi-Agent Systems with Zero Supervision.

[3]: Continual Pre-training of Language Models.

[4]: Continual Learning of Natural Language Processing Tasks: A Survey.

[5]: Demystifying Domain-adaptive Post-training for Financial LLMs.

Acknowledgments

All opinions are my own and do not represent my employer or any of the individuals mentioned below. This blog would not have been possible without the support and leadership of Shafiq Joty, Caiming Xiong and Silvio Savarese from Salesforce Research. Many foundational ideas also stem from discussions with Yifei Ming, Austin Xu, and Xuan-Phi Nguyen from Salesforce Research, as well as the long-standing guidance of Prof. Bing Liu from UIC and Prof. Vincent Ng from UTD.

Citation

@article{ke2025reason,
  title = {Don’t reason alone! Structured Reasoning via Multi-Agent Systems},
  author = {Ke, Zixuan},
  journal = {https://zixuanke.substack.com},
  year = {2025},
  month = {May},
  url = {https://zixuanke.substack.com/p/dont-reason-alone-structured-reasoning}
}

¹ Note that we use the terms “structured thinking”, “planning”, and “workflow” interchangeably to refer to the high-level organization of the reasoning process.